Writing enterprise-level software is a challenging task in general, but creating a trading system is even harder, as it combines two critical requirements: 100% reliability and extremely high performance. This article highlights two proven architectural approaches well suited to a trading application: the LMAX Disruptor and Project Reactor.

As with every other financial software system, reliability is a must. However, core banking has no particularly high performance requirements, as most of its transactions are still batch processed overnight. A trading application, be it an exchange or an order and execution management system like AlgoTrader, needs to process orders in microseconds. Some of today’s exchange matching engines even work with nanosecond precision.

LMAX Exchange

LMAX is a London-based FX exchange. Serving many institutional clients, LMAX has very high requirements in terms of performance, scaling and quality of service. Trades need to execute extremely fast, and they have to do so seamlessly, with equally low latency, for a huge number of concurrent users.

With this goal in mind and a desire to essentially become “the world’s highest performance financial exchange”, LMAX engineers considered and prototyped several approaches to the system design. After a period of evaluation and profiling they concluded that the conventional approaches available at the time had fundamental performance limits. After investigating the domain more deeply, and thanks to an understanding of how modern hardware works (an approach they call “mechanical sympathy”), they came up with a system that was not only truly revolutionary and unique at the time of its conception, but also extremely fast.

LMAX’s architecture and design revolve around two crucial ideas: 1) the entire business logic must be implemented to work in a single-threaded process, as simple but highly efficient code, and 2) there needs to be a way to feed this process with external events as reliably and efficiently as possible, all while keeping the infrastructure and auxiliary processes parallelized and decoupled from the main logic. What the engineers ended up with is an inter-thread concurrency and messaging pattern they later called the “LMAX Disruptor”.

LMAX Architecture

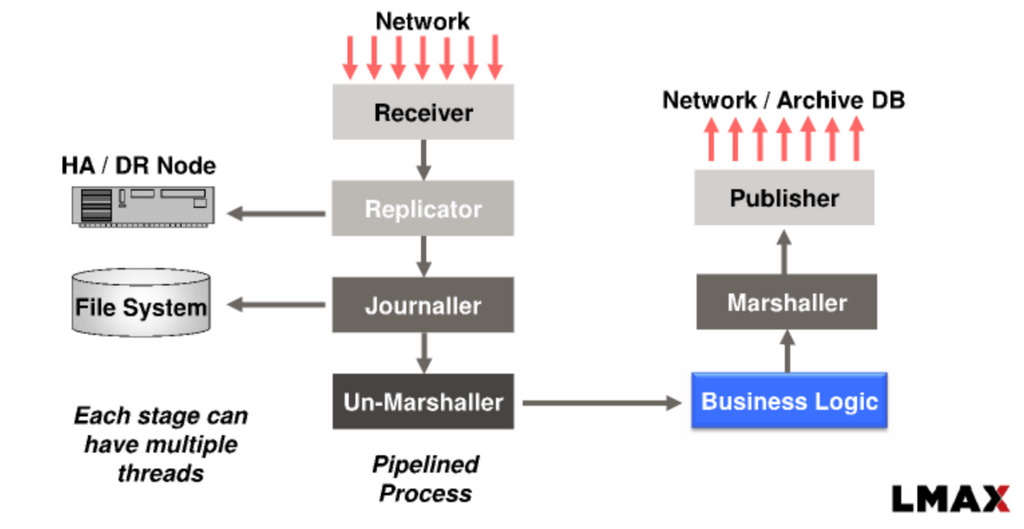

The high-level LMAX architecture (depicted above) can be described by looking at a breakdown of its components:

- a receiver collects incoming requests from the outside world.

- a replicator sends out the events to HA and DR nodes in case the main system goes down.

- a journaller persists the incoming data to durable storage.

- an un-marshaller deciphers the messages so that they can be picked up by the business logic.

- a business logic processor runs all domain-driven operations and outputs result events.

- a marshaller encodes the outgoing events so that they can be published back to the customers and propagated to the back-office systems for archiving and other purposes.

Since the pipeline is staged and stages are supposed to be processed and fed concurrently by multiple threads (apart from the main business logic, which is intended to be processed sequentially, event-by-event), coordinating the processing pipeline becomes critical from an orchestration and performance perspective.

In fact, as LMAX engineers discovered, the coordination itself proved to be the biggest bottleneck and source of latency when done in a conventional way. After digging deeper into the problem, the queue data structure — which is usually the underlying mechanism to coordinate events passing between stages (effectively two or more processing threads) — was identified as the main cause of latency.

Further investigation led to an interesting realization: the natural state of a queue in a running system is to be either almost empty or almost full. A balanced middle ground with an evenly matched rate of production and consumption is very rare, due to natural differences in pace between consumers and producers. The inherent problem with queues is write contention between multiple threads. Even if the system operates very smoothly and elements are taken out of the structure right away, contention still arises, since there will always be at least two threads (a producer and a consumer) writing to the same head node of the queue at the same time. Adding more producers and consumers makes things considerably worse.

After discovering the main cause of latency and unreliability in the system, LMAX engineers resolved it by introducing a much more suitable data structure and a protocol for operating it, a pattern they called the Disruptor.

“Our performance testing showed that the latency costs, when using queues in this way, were in the same order of magnitude as the cost of IO operations to disk (RAID or SSD based disk system)–dramatically slow. If there are multiple queues in an end-to-end operation, this will add hundreds of microseconds to the overall latency. There is clearly room for optimization.”

LMAX Disruptor Technical Paper

Disruptor pattern and RingBuffer

The LMAX Disruptor is a design pattern that separates three concerns: producing events (by a single producer or by multiple producers), processing them in consumers, and coordinating the work between the two. It inherently supports concurrency, and does so efficiently. In a single-producer scenario it needs neither locks nor CAS operations (compare-and-swap, an atomic instruction used for non-blocking synchronization); only in a multi-producer scenario is CAS coordination required.

At the heart of it is a concurrent data structure, called a RingBuffer which, if used correctly, greatly outperforms a design that traditionally relied on queues to achieve the same result.

Producing into RingBuffer

A producing thread doesn’t need to acquire a lock to write to a node. It only needs to know which slot is available next for writing. A single-producer scenario solely requires a memory barrier (such as a write to a volatile variable) so that the producer’s latest update of the RingBuffer index becomes visible to the other threads. In multiple-producer scenarios the producers are coordinated with CAS operations, which are still roughly an order of magnitude cheaper than locks.
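The single-producer case can be sketched in a few lines of plain Java. This is a minimal illustration under stated assumptions, not the LMAX library’s API: the class name `SingleProducerRing` and its methods are hypothetical, and the sketch deliberately ignores wrap-around protection, which is discussed later in the article.

```java
/** Illustrative single-producer ring buffer; not the LMAX library's API. */
final class SingleProducerRing {
    /** Pre-allocated, mutable slot that the producer overwrites in place. */
    static final class Slot {
        long value;
    }

    private final Slot[] slots;
    private final int mask;             // index = sequence & mask (size is a power of two)
    private long nextToWrite = 0;       // only ever touched by the single producer
    private volatile long cursor = -1;  // last published sequence, read by consumers

    SingleProducerRing(int sizePowerOfTwo) {
        if (sizePowerOfTwo <= 0 || Integer.bitCount(sizePowerOfTwo) != 1) {
            throw new IllegalArgumentException("size must be a power of two");
        }
        slots = new Slot[sizePowerOfTwo];
        for (int i = 0; i < slots.length; i++) {
            slots[i] = new Slot();      // allocate every slot up front
        }
        mask = sizePowerOfTwo - 1;
    }

    /** Producer: fill the slot first, then publish it with a volatile write. */
    void publish(long value) {
        long sequence = nextToWrite++;
        slots[(int) (sequence & mask)].value = value;
        cursor = sequence;              // volatile write makes the slot visible
    }

    /** Highest published sequence, or -1 if nothing has been published yet. */
    long cursor() {
        return cursor;
    }

    /** Consumer: callers must only read sequences that are <= cursor(). */
    long read(long sequence) {
        return slots[(int) (sequence & mask)].value;
    }
}
```

No lock and no CAS appear anywhere: the producer owns `nextToWrite` exclusively, and the volatile `cursor` is the only point of coordination with readers.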

Multiple (independent) consumers

Consumers that can work independently of each other do not require any additional synchronization between them; they can simply progress on events as they become available. Again, a single volatile variable acting as a memory barrier is all that is required for consumers to track the RingBuffer index and find the next available slot.

This is where a good understanding of the business domain and a breakdown of internal processes really pays off in terms of performance. Taking LMAX as an example, they knew exactly which of their processes could work in parallel without any additional synchronization between them. Every event, before it is dispatched to the business logic processor, is concurrently replicated, journalled and un-marshalled by three independent workers. The RingBuffer allows each of them to consume without writing into the structure itself, which eliminates contention.

Business logic consumer

Business logic is the main part of the application. In the case of LMAX it is the exchange’s matching engine. Its sole responsibility is to process each order received. For each new order, it examines the order book to see if there is a matching countervailing order. If there is, it executes the order immediately against the matching order. If not, it simply adds the order to the book. With persistence and thread-safety requirements removed, this part can progress as fast as possible; the only limitation is the efficiency of the algorithms and data structures chosen by the programmer. Since the code is cleaner and more predictable, it is also easier for the JIT compiler to optimize at run time.

So how exactly are these events fetched from the RingBuffer into the business logic? Each auxiliary consumer also maintains its own counter. Its state must be visible to other threads, so it is also a volatile variable. The business logic consumer is the main process interested in the progress of the other consumers. Unlike them, it doesn’t care about the index of the RingBuffer itself. Instead, it is only concerned with the minimum index that has already been processed by all of the other consumers. Business logic can only progress on an event that has first been journalled, un-marshalled and replicated. It observes all other consumers, so it knows how far it can progress at a time.
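The gating rule described above boils down to taking the minimum over the upstream counters. The following is a hedged sketch only: `GatedBusinessLogic` and its method names are hypothetical, and `AtomicLong` is used here simply as a convenient holder with volatile read semantics.

```java
import java.util.concurrent.atomic.AtomicLong;
import java.util.function.LongConsumer;

/** Illustrative gating of the business logic on the slowest upstream consumer. */
final class GatedBusinessLogic {
    private final AtomicLong[] upstream; // e.g. journaller, replicator, un-marshaller
    private long processed = -1;         // last sequence handled by the business logic

    GatedBusinessLogic(AtomicLong... upstream) {
        this.upstream = upstream;
    }

    /** Highest sequence that every upstream consumer has already finished. */
    long highestSafeSequence() {
        long min = Long.MAX_VALUE;
        for (AtomicLong sequence : upstream) {
            min = Math.min(min, sequence.get()); // volatile read of each counter
        }
        return min;
    }

    /** Handles every sequence that has become safe; returns how many were handled. */
    int poll(LongConsumer handler) {
        long safe = highestSafeSequence();
        int handled = 0;
        while (processed < safe) {
            handler.accept(++processed);
            handled++;
        }
        return handled;
    }
}
```

With the journaller at sequence 5, the replicator at 3 and the un-marshaller at 7, the business logic may advance to sequence 3 and no further, because the replicator is the slowest of the three.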

Possible system-level optimizations

All of the above characteristics allow for a few interesting things to happen:

- As opposed to a staged pipeline model, when optimizing for performance, developers only have to focus on the single, slowest event consumer in the system at any given time. The element of the system with the poorest performance is identified quickly and it will be much easier to work on improving its performance in isolation, without having to worry about any of the coordination or infrastructure problems.

- If the process is still slow after optimizations there is nothing that prevents it from scaling by simply adding more workers of the same type, since the auxiliary processes are working independently on a per-event basis. For example, if the un-marshalling becomes a bottleneck, this could be resolved by adding a couple of additional un-marshalling consumers and have the work split between them.

- Another natural optimization exposed by this design is batching. The design takes advantage of the batching effect by exposing the ability to progress on events not only one by one but in batches. If a consumer sees that there are N events awaiting processing, it can now choose to process all of them at once instead of one by one.

- For example, if the journaller was stuck for a while and the rest of the system has moved on by N events in the meantime, it can catch up as soon as it gets unstuck by batch processing all of the remaining events at once. This is a highly efficient strategy for sequential and IO-bound operations. On top of that, since the events are laid out next to each other in memory, neighbouring events tend to be fetched by the CPU together, which further improves performance.

Finally, knowing that the coordination and auxiliary processes should no longer be a bottleneck (and having all of the above means to optimize them at the infrastructure level), the biggest engineering focus is now on the business logic itself. Not only that: the entire business logic, stripped of infrastructural problems, can now be developed in a plain, domain-driven design manner. This is a hugely advantageous situation to be in, and in fact a situation that every OO programmer dreams of: a single-threaded, completely in-memory, highly testable and deterministic process. The Disruptor infrastructure guarantees that all events always arrive sequentially and in order.

Moreover, if a bug is discovered in production, it can easily be reproduced in the development environment by replaying the events in their original order (the sequence in which they were persisted by the journaller). Another advantage of writing simpler, cleaner and shorter code is the predictability of execution paths, which further boosts performance, since such code is much friendlier for the JVM and the CPU to optimize.
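The batching effect mentioned above can be illustrated with a small sketch of a journaller that catches up in one go. Everything here is an assumption made for illustration: the class `BatchingJournaller`, the in-memory list standing in for a disk file, and the `flushes` counter standing in for an expensive sync-to-disk call.

```java
import java.util.ArrayList;
import java.util.List;

/** Illustrative journaller that writes all pending events with a single flush. */
final class BatchingJournaller {
    private final List<String> storage = new ArrayList<>(); // stand-in for a disk file
    private int flushes = 0;  // counts the expensive "sync to disk" operations
    private long next = 0;    // next sequence this consumer still has to journal

    /** Journals every event up to and including availableSequence in one batch. */
    int onAvailable(long availableSequence, String[] ring) {
        int batch = 0;
        for (; next <= availableSequence; next++) {
            storage.add(ring[(int) (next % ring.length)]);
            batch++;
        }
        if (batch > 0) {
            flushes++;        // one flush per batch, not one per event
        }
        return batch;
    }

    int flushes() {
        return flushes;
    }

    List<String> storage() {
        return storage;
    }
}
```

If the journaller falls behind by three events, the next call writes all three and pays for a single flush, which is where the gain for sequential, IO-bound work comes from.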

What if you overrun the RingBuffer?

With well-optimized components and a big enough RingBuffer, it is highly unlikely that the buffer will ever run out of free slots, that is, that the producer will wrap around and reach a slot that has not yet been processed by the slowest consumer.

But what would happen if peak traffic is so high that such a scenario occurs? When the producer tries to publish a new event, it sees both the RingBuffer counter and all of the consumer counters. If it sees that an unprocessed entry would be overwritten, the entry is not inserted and the producer effectively blocks. This results in natural back pressure, a mechanism that guards the system against overload and ensures that it operates smoothly and predictably even under high load. In other words, if the system is so busy that adding more events would affect the rate of processing (further buffering would increase latency to undesirable levels for the client who originated the event), further incoming requests are simply rejected.
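A minimal sketch of the wrap-around check, assuming a single producer and a single tracked “slowest consumer” counter. The names `BoundedRing`, `tryPublish` and `markConsumed` are hypothetical, and the sketch leaves out cursor publication to keep the overwrite check in focus.

```java
import java.util.concurrent.atomic.AtomicLong;

/** Illustrative producer-side check that refuses to overwrite unconsumed slots. */
final class BoundedRing {
    private final long[] slots;
    private final AtomicLong slowestConsumer = new AtomicLong(-1); // last consumed sequence
    private long nextToWrite = 0;                                   // single producer only

    BoundedRing(int size) {
        slots = new long[size];
    }

    /** Returns false instead of overwriting a slot that has not been consumed yet. */
    boolean tryPublish(long value) {
        long wrapPoint = nextToWrite - slots.length; // sequence that would be overwritten
        if (wrapPoint > slowestConsumer.get()) {
            return false;                            // buffer is full: apply back pressure
        }
        slots[(int) (nextToWrite % slots.length)] = value;
        nextToWrite++;
        return true;
    }

    /** Consumer reports its progress, which frees slots for the producer. */
    void markConsumed(long sequence) {
        slowestConsumer.set(sequence);
    }
}
```

Whether the caller spins until a slot frees up or rejects the request outright is a policy decision layered on top of this check.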

Such behavior gives the Disruptor the same characteristic as a blocking queue, but without the drawbacks of using one. This is also a desirable characteristic for a serious, production-grade trading system. In the end, it is much better to serve a capped number of users with constant, high-quality service and the lowest possible latency than to allow unlimited access for everyone and eventually crash the system or make it run at an unpredictably slow pace. Especially in a fast-changing environment like an FX exchange, being able to execute orders fast is a critical requirement, particularly if you want to attract market makers.

Unfortunately this problem is omnipresent with many of today’s crypto exchanges. In times of high volatility these exchanges attract such a high number of traders that response times become unpredictable (seconds to execute an order) and in the very worst case lead to entire system failures. At AlgoTrader we believe system reliability should not depend on the asset class traded and therefore we’ve picked software development principles that allow us to offer the highest quality of service and performance with any asset class.

Mechanical sympathy

LMAX engineers often refer to the term “mechanical sympathy”. It is one of the main philosophies that influence their design process. A couple of examples below describe what makes the Disruptor so incredibly fast:

CPU cache friendliness

CPU performance is not only about clock speeds and number of cores anymore. While the GHz race is over, there have been a lot of advancements regarding CPU caches. Over the years these have become not only bigger but also smarter and faster. Being aware of how to write cache-friendly code can greatly improve performance and the LMAX design takes advantage of it. For example:

- The memory for the entries is fully pre-allocated up front, during the RingBuffer initialization phase. Since it is all allocated at the same time, it is highly likely that the data will be laid out contiguously in main memory, which optimizes memory access at run time. CPUs are far more efficient at reading data sequentially than at random reads. Without pre-allocation on startup, the system would also suffer more cache misses and false sharing (when CPU cores write to independent variables that happen to sit on the same cache line, forcing each other to reload it), which further degrades latency.

- The RingBuffer’s sequence numbers and its entries are padded to 64 bytes. That is because the CPU does not read data from cache in words but in so-called cache lines, chunks of data that are typically 64 bytes long (the exact size depends on the CPU architecture). Padding ensures that a sequence number or an entry does not share its cache line with unrelated data, so updating one value does not invalidate its neighbours, which avoids false sharing.
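One common way to approximate such padding on the JVM is to surround the hot field with unused `long` fields, spread over a small class hierarchy so that the padding stays on both sides of the value. The sketch below is illustrative only: the class names are hypothetical, and the actual field layout is ultimately decided by the JVM, so the effect should be verified on the target platform rather than assumed.

```java
/** Seven unused longs (56 bytes) placed before the hot field. */
class LeftPadding {
    protected long p1, p2, p3, p4, p5, p6, p7;
}

/** The only field that is actually read and written. */
class SequenceValue extends LeftPadding {
    protected volatile long value;
}

/** Seven unused longs (56 bytes) placed after the hot field. */
class RightPadding extends SequenceValue {
    protected long p9, p10, p11, p12, p13, p14, p15;
}

/** Illustrative padded counter intended to keep its value on a cache line of its own. */
final class PaddedSequence extends RightPadding {
    PaddedSequence(long initialValue) {
        value = initialValue;
    }

    long get() {
        return value;
    }

    void set(long newValue) {
        value = newValue;
    }
}
```

The padding costs a little over a hundred bytes per counter, which is negligible given how few sequence counters a Disruptor-style setup needs.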

GC friendliness

GC pauses can be a significant source of latency if the system produces a lot of garbage, or if it produces it in a pattern that does not suit the garbage collector configured for the JVM. This is another incentive to pre-allocate the entire RingBuffer data structure during its initialization phase. Since the entire data structure, together with its nodes, is pre-allocated from the beginning and the producer never creates new nodes but only updates their values, the nodes are effectively immortal from the GC perspective.

That’s the LMAX Disruptor. Now let’s look at reactive programming and Project Reactor as an alternative to the LMAX Disruptor.

Reactive programming and Project Reactor

While the Disruptor was a great solution for LMAX itself, it isn’t a silver bullet for every trading system. The Disruptor assumes that only a single business logic processor is needed, but what if we need more than one? What if a system’s business logic is more complex, containing several equally important business logic processors rather than a single order-matching engine? What if they all work at their own pace to produce and send events to each other? In that case, if one of the subsystems is slower, it will be overwhelmed by events coming in from the faster one. With the Disruptor we could simply drop excess events when they represented orders we could not or did not want to process. But what if we would like that event to be processed eventually and have it arrive at a slower consumer, perhaps at a later time when it is less busy? Or maybe we would like to set the pace of event production to match processing capabilities exactly, thereby making both parties work as efficiently as possible.

These are the kind of problems a quantitative trading and order and execution management system like AlgoTrader needs to solve and the kind of problems reactive programming is capable of solving.

Reactive programming

Reactive programming is an event-driven paradigm that is focused on the propagation of change. It shares some goals and philosophies with the Disruptor pattern, for example that the business logic is the main focus for the developer and that most of its processes are executed in a single-threaded context by default. However, reactive programming has a broader and higher-level perspective.

Events

An event is a first-class citizen of the reactive programming world. It is a way to propagate data between producers and consumers. Everything can be an event. Events do not only refer to messages exchanged between actors but also to signals such as subscription requests, cancellation signals, stream completion events and errors. In fact, errors are not thrown as exceptions across a reactive pipeline: if anything goes wrong, the failure is propagated to the consumer as an error event, so it can decide how to react.

Producer vs. consumer

The producer and consumer are equally important actors in the reactive world. The producer emits events for the consumer, which observes them asynchronously. However, it is the consumer who drives the subscription. Firstly, nothing happens until the consumer subscribes for events. Secondly, although the producer emits events asynchronously, as soon as it has one ready to emit, it will always conform to the demand from the consumer. The transmission will only occur for the number of events requested by the consumer. This naturally solves the fast producer-slow consumer scenario and ensures the consumer is never overwhelmed by too many events.
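The demand-driven contract can be tried out with the JDK’s own `java.util.concurrent.Flow` interfaces, which mirror the reactive-streams ones. This is a hedged sketch rather than Reactor code: the class `OneAtATimeSubscriber` and its `run` helper are hypothetical names chosen for the example.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Flow;
import java.util.concurrent.SubmissionPublisher;
import java.util.concurrent.TimeUnit;

/** Illustrative consumer that drives the flow by requesting one event at a time. */
final class OneAtATimeSubscriber implements Flow.Subscriber<Integer> {
    private final List<Integer> received = Collections.synchronizedList(new ArrayList<>());
    private final CountDownLatch done = new CountDownLatch(1);
    private Flow.Subscription subscription;

    @Override
    public void onSubscribe(Flow.Subscription subscription) {
        this.subscription = subscription;
        subscription.request(1);   // nothing is delivered until the consumer asks
    }

    @Override
    public void onNext(Integer item) {
        received.add(item);
        subscription.request(1);   // signal readiness for exactly one more event
    }

    @Override
    public void onError(Throwable error) {
        done.countDown();          // errors arrive as signals, not thrown exceptions
    }

    @Override
    public void onComplete() {
        done.countDown();
    }

    /** Publishes 1..count and returns what the subscriber received, in order. */
    static List<Integer> run(int count) {
        OneAtATimeSubscriber subscriber = new OneAtATimeSubscriber();
        try (SubmissionPublisher<Integer> publisher = new SubmissionPublisher<>()) {
            publisher.subscribe(subscriber);
            for (int i = 1; i <= count; i++) {
                publisher.submit(i);   // buffered until the subscriber requests it
            }
        }                              // close() signals completion to the subscriber
        try {
            if (!subscriber.done.await(5, TimeUnit.SECONDS)) {
                throw new IllegalStateException("subscriber did not complete in time");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return new ArrayList<>(subscriber.received);
    }
}
```

The publisher may have all events ready at once, yet each one is handed over only after a matching `request(1)`, so the consumer sets the pace.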

Streams of events

Reactive programming does not focus on a single event but rather on an entire stream of events and on the characteristics of that stream. The ability to observe a stream as a whole allows the developer to easily model business logic that also observes and reacts to properties such as timing.

Reactive programming is a good toolkit for answering questions such as:

- Is an expected event late, and what are the gaps between event emissions?

- How many events get produced in a given time interval?

- How does an aggregated view of a specific stream look in a given time frame?

Reactive pipeline

Operator chaining is a natural technique used in the reactive world. An operator is a piece of logic put between the producer and consumer. It allows for the manipulation of the flow of events and the subscription itself. In reactive programming it is common to build a business logic flow that is chained together by operators. These operators can decorate, manipulate, filter, group, sample or buffer data.

There is one important caveat to keep in mind when building a system that is effectively a single reactive pipeline. In more traditional setups a staged-event system can easily become vulnerable: whenever one of its parts stalls, everything stalls. This is the main reason why everything happens inside a single business processing node in Disruptor.

How does the reactive paradigm solve this? Firstly, all operations in a pipeline are expected to be non-blocking by default, which ensures smooth transitions between stages. Secondly, whenever something more time consuming or inherently blocking needs to be done (for example a REST call to an external system or any other IO, like a read from disk), it is processed asynchronously. The result comes back to the processing loop as another event, ensuring that the other parts of the system remain unblocked and can proceed in the meantime.

Threading model

When the entire stream is non-blocking and asynchronous the focus moves away from threading logic back to a business-domain one, as with the LMAX example. In fact, when the entire system is purely reactive it is possible to get away with running it on a single thread while remaining highly scalable. Even when a number of integrations with external systems (that are not reactive) is expected, high scalability is still achievable, since all blocking operations can be easily scheduled on an external thread pool without affecting the main reactive process.

Scheduling work is an idiomatic way of telling the reactive system to run something in a particular threading context. As is the case with LMAX’s Disruptor, by default everything happens on a single (usually main) thread, as this is the most effective way to avoid context switches and synchronization between threads. However, it is still possible to take advantage of a multi-threaded environment if necessary:

- If parallelized execution is required or preferable, it can be achieved by dispatching work to a pool of threads. The results are then brought back into a sequential format with the help of a single operator.

- Similarly, if a blocking IO operation needs to be carried out, a single operator can reschedule it to work on a separate pool of threads.

This results in a much smaller number of threads required to run a highly scalable and responsive system. As a result, the need for a thread-per-request approach is eliminated. This makes thread management much less important and keeps the main flow single-threaded. Thus, the main development focus is on what matters most: the business logic.
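The idea of pushing a blocking call onto a separate pool and bringing the result back to the main flow can be sketched with plain JDK executors. This is an assumption-laden illustration, not Reactor code (in Reactor the same intent is typically expressed with the `subscribeOn` and `publishOn` operators); the class name and the thread names `event-loop` and `blocking-io` are hypothetical.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

/** Illustrative hand-off: blocking work runs on a pool, the result returns to one loop thread. */
final class OffloadBlockingWork {
    /** Returns the name of the thread that did the IO and of the thread that handled the result. */
    static String[] run() {
        ExecutorService eventLoop =
                Executors.newSingleThreadExecutor(r -> new Thread(r, "event-loop"));
        ExecutorService blockingPool =
                Executors.newFixedThreadPool(2, r -> new Thread(r, "blocking-io"));
        try {
            return CompletableFuture
                    // pretend this supplier is a slow REST call or a disk read
                    .supplyAsync(() -> Thread.currentThread().getName(), blockingPool)
                    // the result re-enters the single-threaded flow as just another event
                    .thenApplyAsync(ioThread ->
                            new String[] {ioThread, Thread.currentThread().getName()}, eventLoop)
                    .join();
        } finally {
            eventLoop.shutdown();
            blockingPool.shutdown();
        }
    }
}
```

The business logic that consumes the result never leaves the `event-loop` thread, so it needs no locks even though the slow call ran elsewhere.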

Use cases and benefits of reactive programming

Backpressure

As previously mentioned, a slow consumer will never be overwhelmed by a fast producer if they communicate with each other reactively. This maximizes the efficiency of both components: the consuming component always works at full capacity, and the producing one uses resources more efficiently because it no longer produces redundant events.

Business domains that rely heavily on streaming data

Reactive programming naturally attracts business domains that are mostly concerned with data (represented as streams of events) that need to be processed in real time. A prime example is the processing of market data in financial and trading systems. Whenever market conditions change, the observing logic will be notified so that it can quickly react. A reactive library is an effective toolkit to work on streaming problems in a natural way.

High scalability requirements

Thread-based architectures just don’t scale that well. There is a limit to how many threads can be created, fit into memory and managed at a time. The reactor pattern removes this problem by demultiplexing concurrent incoming requests and running them, on a (usually) single application thread, as a sequence of events.

Determinism and replayability

Since everything is an event, it can be stored and replayed if needed. Lack of concurrency between events also ensures determinism and makes code much cleaner and more efficient to run. The same is true for LMAX’s Disruptor pattern.

Focus on the element of time

The notion of time plays an important role in reactive applications. It is possible to learn more about events based on their timing: for example, a stale event may no longer be important, or an event may only be important if it shows up frequently enough. Reactive libraries also greatly improve the testability of time-sensitive operations. For example, Project Reactor makes it very easy to decouple logic from its timing. Since time is represented by a Scheduler that can be swapped, a real-time Scheduler can be used in production, while a Scheduler with artificial timing can be used in a test environment.
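The same principle of injecting the time source can be shown with nothing but the JDK. The sketch below uses `java.time.Clock` as a stand-in for a swappable Scheduler; it is an analogy under that assumption, not Reactor’s API, and `StaleEventFilter` is a hypothetical class.

```java
import java.time.Clock;
import java.time.Duration;
import java.time.Instant;

/** Illustrative stale-event check whose notion of "now" is injected from outside. */
final class StaleEventFilter {
    private final Clock clock;      // system clock in production, fixed clock in tests
    private final Duration maxAge;

    StaleEventFilter(Clock clock, Duration maxAge) {
        this.clock = clock;
        this.maxAge = maxAge;
    }

    /** An event is fresh if it is no older than maxAge according to the injected clock. */
    boolean isFresh(Instant eventTime) {
        Duration age = Duration.between(eventTime, clock.instant());
        return age.compareTo(maxAge) <= 0;
    }
}
```

In production the filter would be built with `Clock.systemUTC()`, while a test can pass `Clock.fixed(...)` and get fully deterministic results without sleeping.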

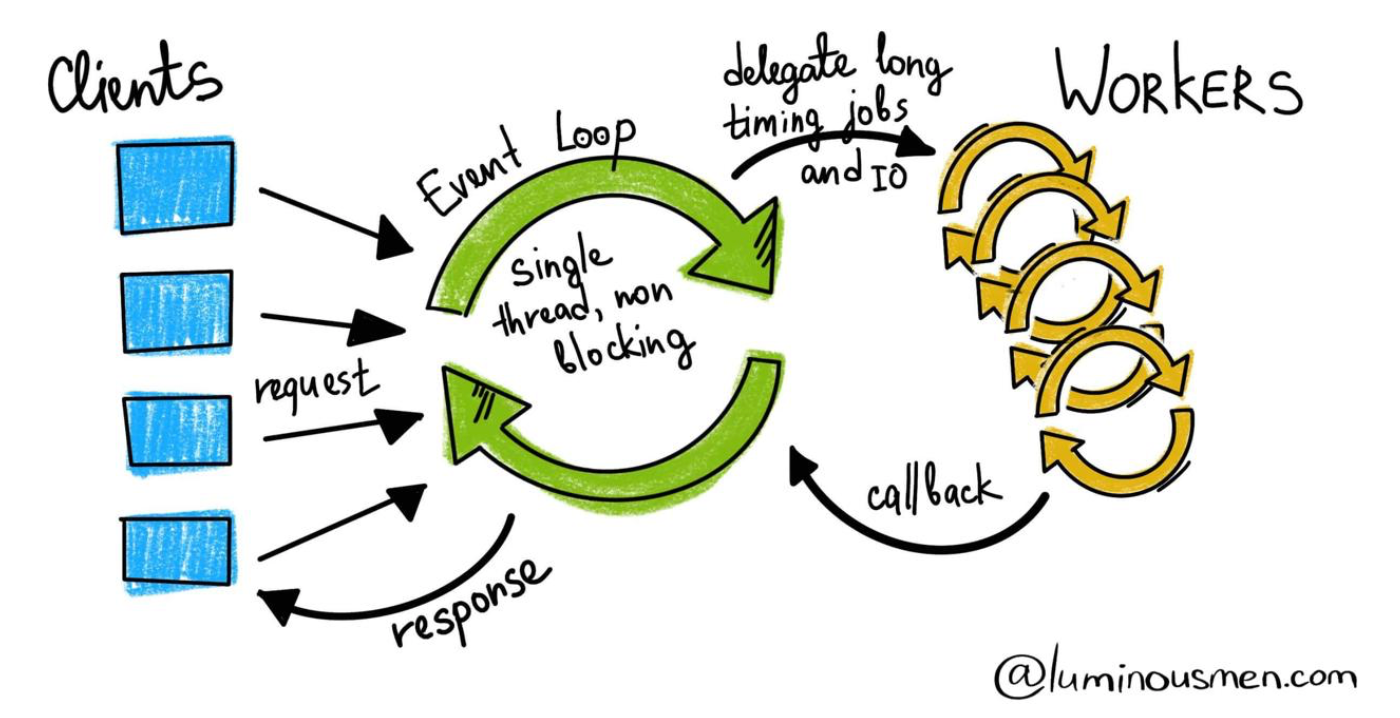

Reactor pattern

It is best to describe the underlying Reactor pattern with the diagram below:

Multiple concurrent requests are first demultiplexed and inserted sequentially into a single-threaded event loop which resembles the LMAX Disruptor RingBuffer. The loop is responsible for invoking business-logic operations that may be either instantaneous or blocking. Everything that is known to be blocking is dispatched out of the loop and the result is then propagated back into the loop asynchronously.

Reactive programming model viewed from the LMAX Disruptor perspective

Events vs asynchronous streams of events execution

The LMAX Disruptor allows us to build systems with single-threaded business logic processes that focus on a single event at a time. The greatest focus is put on maximizing execution performance, where both throughput and latency play an important role. This is done by getting as close to the bare metal as the JVM allows. This approach is very well suited to an exchange-system application like LMAX, which usually has to do a single job at a time (match orders) at high speed.

Reactive programming on the other hand is focused on the entire streams of events and ways of interacting with them. It is much better suited to solve more complex business problems where a broader operating context is required. If we are not only concerned about a single event, but everything happening around it–the timing of the event, how it compares to events that arrived before it or how the situation would look when combined with other streams of events–then a reactive approach is the most natural choice. Reactive programming also puts a strong emphasis on performance. On a micro level it makes use of highly efficient data structures to pass events between producers and consumers (in fact, LMAX’s RingBuffer is one of them) and on a macro level it ensures maximum utilization of independent processes by regulating the load between them.

Push-pull backpressure & asynchronicity

Another virtue of reactive programs is that they have the context of external actors and interoperability with external systems built-in on a protocol level.

While Disruptor leverages backpressure so that the system accepting events is never overwhelmed with high traffic (by dropping excessive events if necessary), reactive programming takes it a level higher by making backpressure a first-class concern.

This approach allows us to model not only single-process or single-machine applications, but also entire distributed ecosystems that are fully reactive. Its backpressure capabilities are also much more robust: if a producer is too fast, dropping excess events is no longer the only option, and we may elect a more suitable strategy, like buffering, replaying or sampling.
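To make the difference between overflow strategies concrete, here is a small synchronous sketch of a bounded buffer with two policies. It is only an illustration: `OverflowBuffer` and its `Strategy` values are hypothetical names, loosely analogous to what Reactor exposes through operators such as `onBackpressureDrop` and `onBackpressureLatest`.

```java
import java.util.ArrayDeque;
import java.util.Deque;

/** Illustrative bounded buffer with a selectable overflow strategy. */
final class OverflowBuffer<T> {
    enum Strategy { DROP_NEWEST, KEEP_LATEST }

    private final Deque<T> queue = new ArrayDeque<>();
    private final int capacity;
    private final Strategy strategy;

    OverflowBuffer(int capacity, Strategy strategy) {
        if (capacity < 1) {
            throw new IllegalArgumentException("capacity must be at least 1");
        }
        this.capacity = capacity;
        this.strategy = strategy;
    }

    /** Returns true if the event was stored, false if it was dropped. */
    boolean offer(T event) {
        if (queue.size() < capacity) {
            queue.addLast(event);
            return true;
        }
        if (strategy == Strategy.KEEP_LATEST) {
            queue.removeFirst();   // sacrifice the oldest event to keep the freshest data
            queue.addLast(event);
            return true;
        }
        return false;              // DROP_NEWEST: reject the incoming event
    }

    /** Returns the oldest buffered event, or null if the buffer is empty. */
    T poll() {
        return queue.pollFirst();
    }
}
```

A market data feed would usually prefer `KEEP_LATEST`, since a stale quote has little value, whereas an order flow cannot afford to lose events silently and needs a different policy altogether.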

Single-threaded, non-blocking composition

While the Disruptor pattern is all about efficiently feeding a single business logic process from a multi-threaded environment, reactive programming is about composing such processes. It is, of course, possible to compose a system out of multiple Disruptors feeding each other, but it is not recommended. Since it would lack a consumer that drives backpressure, such a system would be built from components that do not respect each other’s consumption rates. It would be much harder to maintain an efficient and smooth flow of events, which in turn could undo the performance gains achieved by the Disruptor. Therefore, if many integrations with external systems are expected (and hence many blocking calls to external services), asynchronous programming with a reactive approach is a more viable option.

Project Reactor and its optimizations

At AlgoTrader, we have chosen Project Reactor, developed by Pivotal (now part of VMware), as our toolkit and library for designing the reactive components of our system. While we considered a few other contenders, Pivotal’s implementation presented itself as one of the most viable options, and since we already rely on the Spring ecosystem, it seemed a natural choice.

While all reactive libraries out there are quite similar to each other (in the end, they all implement the same reactive-streams specification) when examined deeper we identified some interesting caveats regarding Project Reactor implementation choices that we’d like to share.

RingBuffer

Since this article focuses on LMAX’s Disruptor as much as on reactive programming, this has to be the first point on the list. Reactor’s core library relies on the very same LMAX Disruptor RingBuffer described in the first part of the article. This makes a lot of sense when you picture a standard reactive pipeline: it is built from stages of processing, the operators. As we already know, stages of processing, especially asynchronous ones, need a coordination mechanism between them, and a queue is not the best choice. Reactor uses the same LMAX library to solve this problem, which alone makes it incredibly fast.

Operation fusion

With a lot of reactive operators chained together, each with a different processing speed, events have to pass through every stage and are often buffered along the way. To reduce this overhead, Project Reactor makes the operators fusible:

- macro fusion: replaces two or more subsequent operators with a single operator, thus reducing the subscription-time overhead of the sequence. For example, if Reactor can statically deduce that the source will always contain a single element, redundant operators like merge() or concat() will not be instantiated. Also, if multiple operators of the same type have been applied, Reactor will flatten them into a single one behind the scenes. For example, in pseudocode:

      flux
        .filter(test A)
        .filter(test B)
        .map(apply X)
        .map(apply Y)
        .subscribe()

  becomes:

      flux
        .filter(test A && test B)
        .map(apply Y(apply X))
        .subscribe()

  Macro fusion mainly happens at assembly time (when the reactive chain is constructed), so the overhead at subscription time (when the data is actually passing through) is reduced.

- micro fusion: the general idea behind micro fusion is that, given a diverse set of operators that have to deal with queues or ring buffers for backpressure, the underlying data structure is not instantiated separately for each of them. Instead, a single compatible one is used and shared between all of them.
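The operator flattening described under macro fusion relies on the fact that adjacent filters and adjacent maps can be composed without changing the result. The sketch below demonstrates that equivalence with `java.util.stream` as a stand-in; it does not show Reactor’s internal fusion mechanism, and the concrete predicates and functions are made-up placeholders for “test A”, “test B”, “apply X” and “apply Y”.

```java
import java.util.List;
import java.util.function.Function;
import java.util.function.Predicate;
import java.util.stream.Collectors;

/** Illustrative equivalence between a chain of operators and its fused form. */
final class FusionSketch {
    static final Predicate<Integer> TEST_A = n -> n % 2 == 0;        // placeholder "test A"
    static final Predicate<Integer> TEST_B = n -> n > 2;             // placeholder "test B"
    static final Function<Integer, Integer> APPLY_X = n -> n + 1;    // placeholder "apply X"
    static final Function<Integer, Integer> APPLY_Y = n -> n * 10;   // placeholder "apply Y"

    /** Four separate stages: two filters followed by two maps. */
    static List<Integer> unfused(List<Integer> input) {
        return input.stream()
                .filter(TEST_A)
                .filter(TEST_B)
                .map(APPLY_X)
                .map(APPLY_Y)
                .collect(Collectors.toList());
    }

    /** Two stages: the filters are AND-ed and the maps are composed (X first, then Y). */
    static List<Integer> fused(List<Integer> input) {
        return input.stream()
                .filter(TEST_A.and(TEST_B))
                .map(APPLY_X.andThen(APPLY_Y))
                .collect(Collectors.toList());
    }
}
```

Both variants produce the same output for the same input, but the fused one passes each element through two stages instead of four.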

Summary

To sum up we would like to give you a short list of pros and cons as well as differences between the two approaches and when it is best to use them.

| Disruptor Pattern | Project Reactor |
| --- | --- |
| Very specific and narrowly defined system requirements, the application architecture solves a single problem at a time | Serves multiple different requirements and is agile enough to be extended with changing business requirements while remaining highly efficient |
| Application architecture solves a single, prime concern, with all other concerns treated as auxiliary | Modular applications, with rich and heterogeneous business logic processors that often influence each other |
| Hard cap on the number of users or requests served is acceptable – maintaining the lowest possible latency at all times is the top priority | While low latency is still the primary concern, additional business logic on top is needed to handle backpressure. The application is now a single element and is aware of the production and consumption rates of other components at all times, so it can work more efficiently |
| Has a library containing a well-defined data structure and a protocol to use it efficiently | An entire programming paradigm focused on efficient propagation of change |
| Business logic has to be processed as a single atomic operation per event | Business logic can be broken down into a pipeline of steps where each of the steps can be executed asynchronously |
| Business logic is a single central part of the system that all other parts have to adjust to – for example, an order execution engine simply processes events as soon as possible, without worrying that the other side may be too slow to consume results | Business logic elements can represent both a producer and a consumer. For example, a market data service always feeds other parts of the system (position and PnL tracking, trading strategies, execution algos) with the most up-to-date market data event possible, regardless of the individual rates of consumption of each of the components. Different components may also rely on different backpressure strategies (sometimes dropping events is allowed, sometimes it is not an option) |
| Simple API from the business logic perspective – the only way to consume an event is to accept and process that event | Robust API is provided for the event consumer – it may elect up front how many events it is interested in and its rate of consumption, and events may be natively buffered, sliced or sampled in time |
| High testability of business logic and determinism – events may be replayed as they came in to reproduce any scenario that happened in production | High testability of business logic and determinism – events may be replayed as they came in to reproduce any scenario that happened in production. On top of that, event streams carry a notion of time – it is possible to recreate not only the sequences of events coming in, but also their timing |
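The "elect up front how many events it is interested in" row of the comparison refers to the demand-based protocol of Reactive Streams, which the JDK also exposes as `java.util.concurrent.Flow`. The sketch below uses only that JDK interface, not Reactor itself. The names `DemandSketch`, `ListPublisher` and `BoundedSubscriber` are hypothetical, and the publisher is a single-threaded toy that ignores re-entrant requests, invalid demand and the concurrency rules a real implementation has to follow.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;
import java.util.concurrent.Flow;

// Illustration only: a single-threaded toy publisher, not Reactor code.
class DemandSketch {

    // Emits items from a list, but never more than the subscriber has requested.
    static final class ListPublisher implements Flow.Publisher<Integer> {
        private final List<Integer> items;

        ListPublisher(List<Integer> items) { this.items = items; }

        @Override
        public void subscribe(Flow.Subscriber<? super Integer> subscriber) {
            Iterator<Integer> it = items.iterator();
            subscriber.onSubscribe(new Flow.Subscription() {
                private boolean done;

                @Override
                public void request(long n) {
                    // Deliver at most n items, then wait for further demand.
                    for (long i = 0; i < n && !done && it.hasNext(); i++) {
                        subscriber.onNext(it.next());
                    }
                    // Signal completion once the source is exhausted.
                    if (!done && !it.hasNext()) {
                        done = true;
                        subscriber.onComplete();
                    }
                }

                @Override
                public void cancel() { done = true; }
            });
        }
    }

    // Requests a fixed number of events up front and records what arrives.
    static final class BoundedSubscriber implements Flow.Subscriber<Integer> {
        final List<Integer> received = new ArrayList<>();
        boolean completed;
        private final long demand;

        BoundedSubscriber(long demand) { this.demand = demand; }

        @Override public void onSubscribe(Flow.Subscription s) { s.request(demand); }
        @Override public void onNext(Integer item) { received.add(item); }
        @Override public void onError(Throwable t) { throw new IllegalStateException(t); }
        @Override public void onComplete() { completed = true; }
    }

    // Subscribes with the given demand and returns the events that were delivered.
    static List<Integer> take(List<Integer> source, long demand) {
        BoundedSubscriber subscriber = new BoundedSubscriber(demand);
        new ListPublisher(source).subscribe(subscriber);
        return subscriber.received;
    }

    public static void main(String[] args) {
        System.out.println(take(List.of(1, 2, 3, 4, 5), 2)); // [1, 2]
    }
}
```

The producer holds back the remaining three events until more demand is signalled, which is the essence of backpressure: the consumer, not the producer, sets the pace.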

At AlgoTrader we use Project Reactor for a number of the core components in our system. We identified areas where Project Reactor is a natural fit, especially since we are dealing with a huge variety of event types, coming from multiple venues and data providers in real time. Different venues and data providers not only use different data models, but also different protocols, and they often stream data at various speeds. It is a challenge not only to harness all such heterogeneous sources of data in real time, but also to propagate events to end users in the most useful way. Project Reactor allows us not only to take advantage of improved latencies in reacting to events, but also to extract the most useful information out of the incoming data while keeping our codebase modular and highly testable.

The AlgoTrader system is built out of many logical components, each of them providing business value to customers. It is of critical importance for us that each component operates as efficiently as possible and that there are no bottlenecks that could slow down any component of the system.

On top of that, we have to maintain the flexibility to respond to rapidly changing requirements. It is not only our platform that is constantly evolving; our clients’ requirements are also highly unique and often change over time. The flexibility of reactive programming helps us make our system easily adaptable, even to the most demanding requirements.